Hello everyone!

I am Jasjeet Singh (also known as jasje on IRC and 07jasjeet on GitHub). I am an undergraduate student pursuing information technology at UIET, Panjab University, Chandigarh, India. This year I participated in Google Summer of Code under MetaBrainz and worked on implementing a feed section in the new and upcoming ListenBrainz Android app, derived from the feed on the ListenBrainz website. Along with implementing the original feed section, I also added new features that are currently available only in the app. My mentor for this project was Akshat Tiwari (akshaaatt on IRC).

Proposal

For readers who are not acquainted with ListenBrainz, it is a website where you can integrate multiple listening services and view and manage all your listens on a unified platform. You can not only peruse your own listening habits but also find other interesting listeners and interact with them. Moreover, you can interact with your followers by sending recommendations or pinning recordings. The feed is an integral part of this social interaction between users, and so my GSoC proposal aimed to bring it to the ListenBrainz Android app, using Compose as the primary UI toolkit and Kotlin as the primary language.

Pre-Community Bonding Period

The pre-community bonding period refers to the time frame between when I first hopped into the MetaBrainz community and when my GSoC proposal was selected. I had done a lot for ListenBrainz Android by the time the GSoC results were announced. For example, I implemented Year in Music, Explicit Theme Switch, fixed and redesigned the Listen Scrobble Service, fixed multiple bugs related to BrainzPlayer, ANRs, and a lot more. I became well aware of the codebase and how things work in MetaBrainz even before the proposals were submitted.

Community Bonding Period

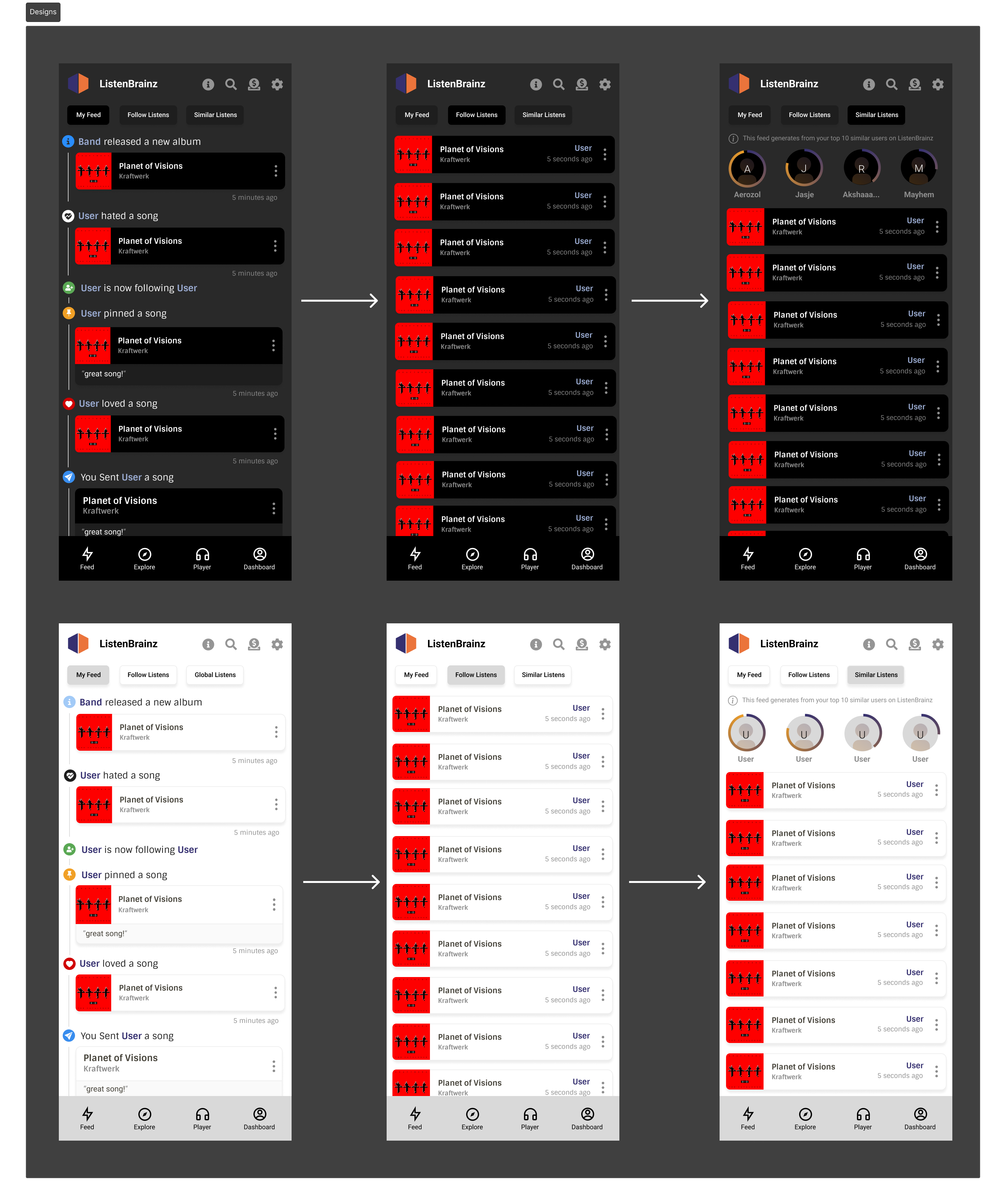

During the community bonding period, I tried to maintain the pace, but due to exams, I could only fix two minor bugs. While the bug fixing didn’t go as expected, the design work went great. With the help of MetaBrainz’s in-house designer, aerozol, we initialised the design system for ListenBrainz Android and also created mockups of the feed section in Figma.

Below are the designs we tentatively put forth during the community bonding period. Since then, there have been crucial design changes to all screens, especially the Similar Listens screen, whose redesign will be completed after GSoC.

Apart from designing the mockups, two new ideas for feed were established and finalised. Follow Listens and Similar Listens were the new additions to feed for which endpoints in the server didn’t exist. I took up the task and started its implementation during this period.

Coding Period (Before midterm)

This project was divided into two phases. The first phase primarily included the creation of a search user feature in the app and follow listens and similar listens endpoints for the server.

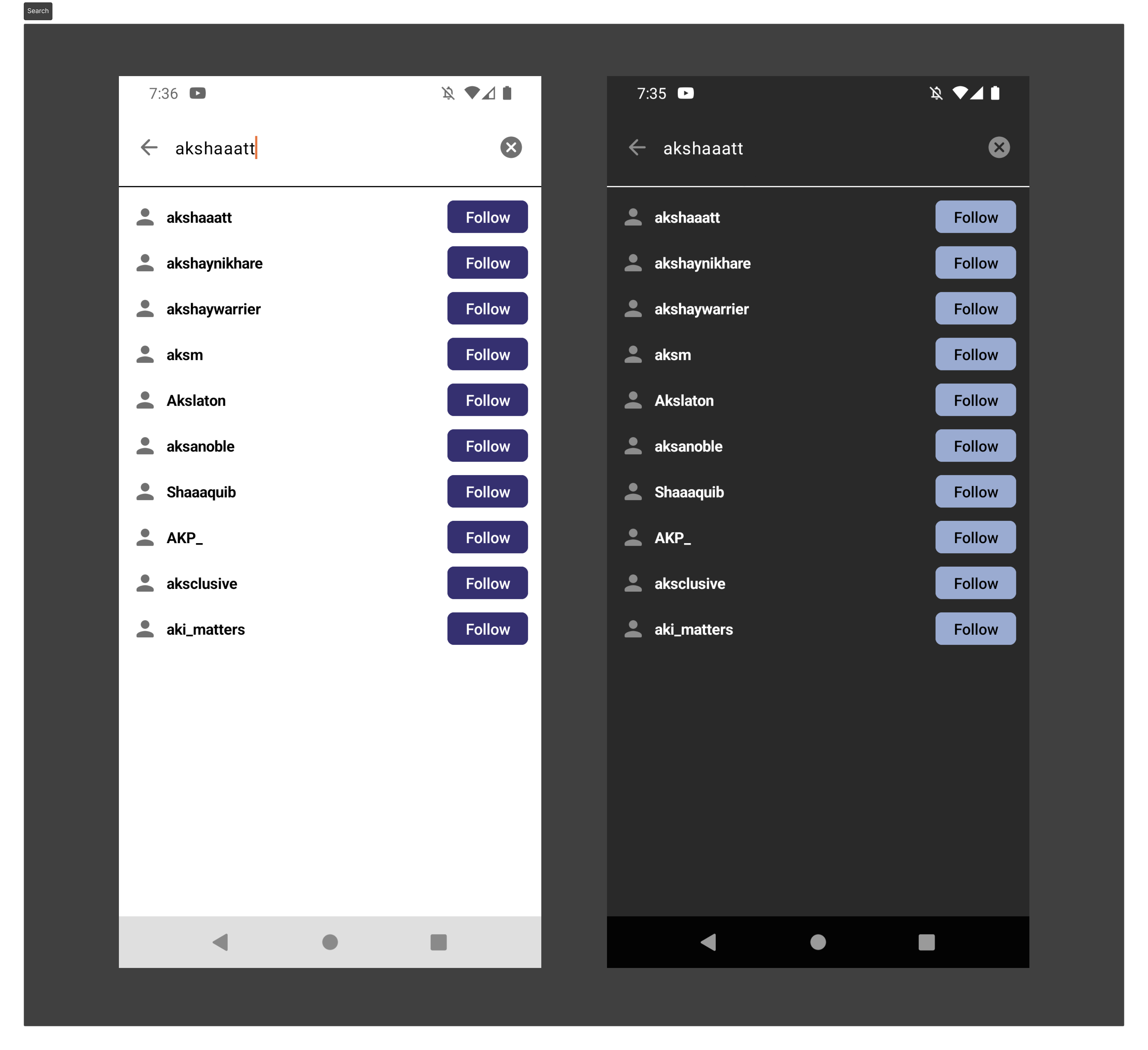

The search feature was an integral part because of the upcoming implementations on top of it. In this project, only users can be searched and followed, but its scope is a lot bigger than just that, which I cannot reveal in this post. Coming back, searching is a heavy operation for the server. Searching whenever a user types a letter leads to the user hitting rate limits and the server taking excess load. To solve this, debouncing was implemented with a delay of 700 milliseconds.
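To illustrate the idea, here is a minimal debouncing sketch in plain Kotlin. It is not the app's code: the `Debouncer` class and its method names are hypothetical, and only the 700 millisecond delay comes from the project. Each new keystroke cancels the search scheduled for the previous one, so only the final query is sent.

```kotlin
import java.util.concurrent.CopyOnWriteArrayList
import java.util.concurrent.Executors
import java.util.concurrent.ScheduledFuture
import java.util.concurrent.TimeUnit

/**
 * Runs only the most recent action, and only once no newer action has
 * arrived for [delayMillis]. Hypothetical helper, not the app's class.
 */
class Debouncer(private val delayMillis: Long) {
    private val scheduler = Executors.newSingleThreadScheduledExecutor()
    private var pending: ScheduledFuture<*>? = null

    @Synchronized
    fun submit(action: () -> Unit) {
        // A newer keystroke cancels the search scheduled for the older one.
        pending?.cancel(false)
        pending = scheduler.schedule(Runnable { action() }, delayMillis, TimeUnit.MILLISECONDS)
    }

    fun shutdown() {
        scheduler.shutdown()
    }
}

fun main() {
    val searched = CopyOnWriteArrayList<String>()
    val debouncer = Debouncer(delayMillis = 700)

    // Simulate a user typing "jas" quickly: three submissions inside the window.
    listOf("j", "ja", "jas").forEach { query ->
        debouncer.submit { searched.add(query) }
    }

    Thread.sleep(1000) // Wait past the 700 ms window.
    debouncer.shutdown()
    println(searched) // Only the last query reaches the "server".
}
```

In a coroutine-based view model, the `debounce` operator on a Kotlin `Flow` would typically do the same job with less code.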

This screen also contains the follow button, a critical reusable component that is used app-wide. We took a new approach to implementing this button: it works optimistically. The button toggles its state as soon as it is clicked, which is optimistic because it expects the request to the server to succeed. In case of failure, the button reverts its state and shows the user a message that the operation has failed. This gives the user a perception of blazing-fast functionality, which is what we wanted to achieve.
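The optimistic pattern can be sketched in a few lines of plain Kotlin. This is a simplified illustration under stated assumptions, not the app's implementation: `OptimisticFollowState` and `sendRequest` are hypothetical names, and the request is modelled as a blocking function that returns `true` on success, whereas the real call would be asynchronous.

```kotlin
/**
 * Hypothetical holder for a follow button's state.
 * [sendRequest] stands in for the network call and returns true on success.
 */
class OptimisticFollowState(
    initiallyFollowing: Boolean,
    private val sendRequest: (follow: Boolean) -> Boolean,
) {
    var isFollowing: Boolean = initiallyFollowing
        private set
    var errorMessage: String? = null
        private set

    fun toggle() {
        val previous = isFollowing
        // Flip immediately so the UI responds before the server does.
        isFollowing = !previous
        errorMessage = null

        val succeeded = sendRequest(isFollowing)
        if (!succeeded) {
            // Roll back and surface the failure to the user.
            isFollowing = previous
            errorMessage = "Could not update follow status. Please try again."
        }
    }
}

fun main() {
    val ok = OptimisticFollowState(initiallyFollowing = false) { true }
    ok.toggle()
    println(ok.isFollowing) // The request succeeded, so the new state is kept.

    val failing = OptimisticFollowState(initiallyFollowing = false) { false }
    failing.toggle()
    println(failing.isFollowing) // The request failed, so the state was reverted.
    println(failing.errorMessage)
}
```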

A lot of core components were also established, like a streamlined error handling class called ResponseError, which is easier to package and show in the UI, and a base unit test class with the Mockito-Kotlin framework.

This is a demonstration of how this feature works.

This feature was completed in the span of three PRs:

- Phase 1.1 (Social service, repository and tests setup with error handling class)

- Phase 1.2 (View model, UI and unit tests for view model)

- Phase 1.3 (View model tests with updated BaseUnitTest class)

Apart from the Android side of things, on the server, development of the Follow Listens endpoint (GET /user/<user_name>/feed/events/listens/following) was initiated. It provides all the listens from users that are followed by the requesting user. While developing this endpoint, we realised that the implementation was flawed: the search space wasn’t limited to any specific time period, nor was the count of listens per user. This meant the endpoint would eventually time out if the available data dated far back, and, when enough data was available, the listens of only one followed user would be returned. To fix this, lucifer (a mentor as well) suggested that we limit the search space to 7 days and paginate based on timestamps alone.
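The pagination idea can be illustrated with a small Kotlin sketch. It is not the server code: `Listen`, `followListensPage` and the `maxTs` and `count` parameters are assumptions made for this example, and the seven-day window is assumed to be measured back from `maxTs`.

```kotlin
/** Simplified listen record; field names are assumptions for this sketch. */
data class Listen(val userName: String, val listenedAt: Long, val track: String)

const val SEVEN_DAYS_SECONDS = 7L * 24 * 60 * 60

/**
 * Returns up to [count] listens strictly older than [maxTs], newest first,
 * looking back at most seven days from [maxTs].
 */
fun followListensPage(all: List<Listen>, maxTs: Long, count: Int): List<Listen> {
    val minTs = maxTs - SEVEN_DAYS_SECONDS
    return all.asSequence()
        .filter { it.listenedAt >= minTs && it.listenedAt < maxTs }
        .sortedByDescending { it.listenedAt }
        .take(count)
        .toList()
}

fun main() {
    val now = 1_700_000_000L
    val listens = listOf(
        Listen("alice", now - 60, "Track A"),
        Listen("bob", now - 120, "Track B"),
        Listen("alice", now - 2 * SEVEN_DAYS_SECONDS, "Too old"),
    )

    val firstPage = followListensPage(listens, maxTs = now, count = 1)
    println(firstPage.map { it.track })

    // The oldest timestamp on a page becomes the upper bound of the next page.
    val secondPage = followListensPage(listens, maxTs = firstPage.last().listenedAt, count = 5)
    println(secondPage.map { it.track })
}
```

Bounding the window keeps each query cheap regardless of how far back a user's history goes, and a timestamp cursor avoids offset-based paging over a list that keeps growing.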

Tests were also created for the endpoint after a painful debugging session that was finally resolved by a puny sleep() call, thanks to lucifer. Setting up the Follow Listens endpoint took a lot longer than expected, so the development of the Similar Listens endpoint was pushed to phase 2.

Relevant links to my work on Follow Listens endpoint:

- Pull Request

- Ticket (For relevant details)

Coding Period (Phase 2: After midterm)

The main big bang of the season, i.e., feed, was started in full force after the midterm evaluation, although planning was done beforehand with a few changes.

From the beginning, our aim was to make all components reusable, and that trend followed through this period. Another aim was to preserve compose preview for composables by hoisting states up in the compose hierarchy. With these two in mind, we set about creating a reactive and scalable UI for the project.

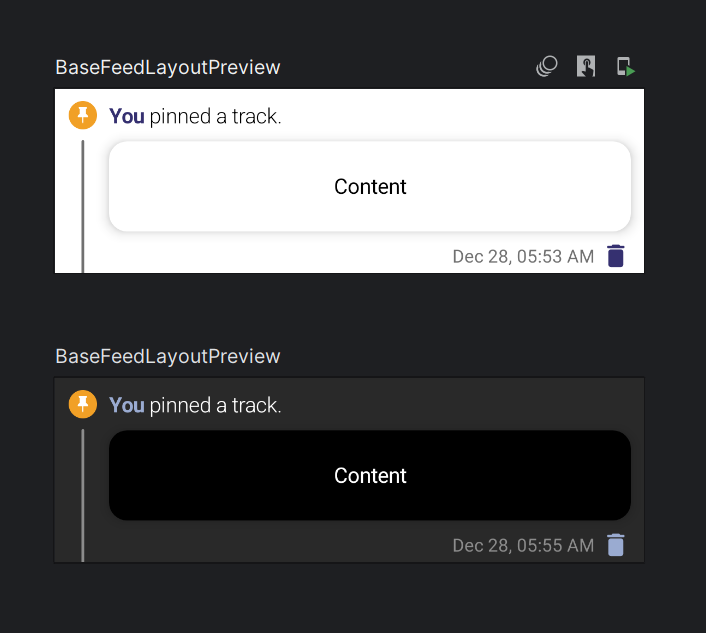

Base Feed Layout

To make a feed event reusable, BaseFeedLayout composable was established, which is widely used for creating all kinds of feed events.

```kotlin
@Composable
fun BaseFeedLayout(
    modifier: Modifier = Modifier,
    eventType: FeedEventType,
    event: FeedEvent,
    parentUser: String,
    onDeleteOrHide: () -> Unit,
    isHidden: Boolean = event.hidden == true,
    content: @Composable () -> Unit
)
```

One of the complex parts was adjusting the horizontal line that runs parallel to the Content as the height of the Content changes. The straightforward approach is to let Compose calculate the bounds and lay out the composable first, then measure it and lay out all the content again. But this approach was inefficient and guaranteed a recomposition on every layout, which makes scaling impossible. To bypass this, we made use of SubcomposeLayout, which defers composing the line until the bounds of Content have been measured, feeds those bounds to our horizontal line, and lays the two out together. This saved the recomposition that the first approach would incur and improved performance by up to 2X.

Feed Event Type class

Ultimately, BaseFeedLayout composable is responsible for displaying a feed event based on the parameters. FeedEvent is the data class for our raw JSON response from the server, while FeedEventType is an enum class that defines the ambiguous properties of a FeedEvent.

```kotlin
enum class FeedEventType(
    val type: String,                  // String identifier for the event.
    @DrawableRes val icon: Int,        // Feed icon for the event.
    val isPlayable: Boolean = true,    // Can the event be played?
    val isDeletable: Boolean = false,  // Can the event be deleted?
    val isHideable: Boolean = false,   // Can the event be hidden?
) {
    /* Our Events */;

    // Our helper functions

    @Composable
    fun Tagline(/* Params */) // Tagline for the respective feed event.

    @Composable
    fun Content(/* Params */) // Content for the respective feed event.
}
```

The parameters and functions of the FeedEventType class contain all the ambiguous properties that remain static and are not provided by the server response.

Now, getting a feed event is as simple as writing this line of code, which demonstrates the power of polymorphism:

```kotlin
eventType.Content(/* params */)
```

With this function set, it was time to stitch it up and have a beta look at it. Automatic paging is an important function that every app in today’s era has. For that, we employed the View Pager jetpack library, and with a few hiccups here and there, paging was a success. Soon after, the whole feed section came together, and the beta UI was ready.
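The enum-based dispatch behind FeedEventType can be shown with a stripped-down, Compose-free sketch. Everything here is illustrative: `SimpleFeedEventType`, its entries, the `tagline` function and the `fromType` helper are stand-ins made up for this example, not the app's actual class.

```kotlin
/** Simplified stand-in for FeedEventType; entries and strings are illustrative. */
enum class SimpleFeedEventType(
    val type: String,                 // String identifier for the event.
    val isPlayable: Boolean = true,   // Can the event be played?
    val isDeletable: Boolean = false, // Can the event be deleted?
) {
    LISTEN("listen") {
        override fun tagline(user: String) = "$user listened to a track"
    },
    FOLLOW("follow", isPlayable = false) {
        override fun tagline(user: String) = "$user followed someone"
    },
    RECORDING_PIN("recording_pin", isDeletable = true) {
        override fun tagline(user: String) = "$user pinned a track"
    };

    /** Each entry supplies its own text, so callers never branch on the type. */
    abstract fun tagline(user: String): String

    companion object {
        /** Maps a string identifier to an entry, or null if it is unknown. */
        fun fromType(type: String): SimpleFeedEventType? =
            values().firstOrNull { it.type == type }
    }
}

fun main() {
    val eventType = SimpleFeedEventType.fromType("recording_pin")
    println(eventType?.tagline("jasje"))
}
```

Because every entry carries its own behaviour, adding a new kind of event means adding one enum entry rather than touching every `when` expression that renders events.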

Performance optimisations for feed UI

While building this feature, performance was a big concern. To limit the number of states on a screen, an array of steps was taken, such as state hoisting and creating a MutableStateList instead of assigning individual states to a repeating composable. MutableStateList was also replaced with MutableStateMap wherever feasible.

Compose compiler reports were generated, and classes were marked as stable or immutable to make most of the components skippable and stable, which led to better overall performance.

Remote Playback

The next big task to accomplish was the refactoring of old code and the integration of remote players, mainly Spotify App Remote and YouTube Music API, into Feed. Previous implementations of these players were limited to only one screen, weren’t reusable, and had some flaws. We set out to fix all of that.

First, we began with the YouTube Music API, which was simple enough to mould into a dependency that could be injected, and that is what we did by creating a RemotePlaybackHandler class.

Contrary to YouTube Music, Spotify App Remote is an SDK, which means a lot of work went into making it reusable. One complex challenge we faced was converting the SDK’s AsyncTask functions to suspend functions so that Kotlin Coroutines could be used to call these functions synchronously. Another challenge was to convert the Subscriptions used by the SDK to Flows and manage them to avoid memory leaks. With this, we added Spotify App Remote’s important interactive functions to the RemotePlaybackHandler class and used this new class to play tracks app-wide.
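As a rough illustration of the callback-to-suspend conversion, here is a sketch that uses only the Kotlin standard library. `FakeRemotePlayer` and `playAwait` are hypothetical and do not mirror the Spotify SDK's real API; they only show the general shape of wrapping a callback-style call in a suspend function.

```kotlin
import kotlin.coroutines.*

/** Hypothetical callback-style API, standing in for an SDK call. */
class FakeRemotePlayer {
    fun play(uri: String, onSuccess: (String) -> Unit, onError: (Throwable) -> Unit) {
        if (uri.isBlank()) {
            onError(IllegalArgumentException("empty uri"))
        } else {
            onSuccess("playing $uri")
        }
    }
}

/** Wraps the callback API so callers can write sequential coroutine code. */
suspend fun FakeRemotePlayer.playAwait(uri: String): String =
    suspendCoroutine { continuation ->
        play(
            uri,
            onSuccess = { result -> continuation.resume(result) },
            onError = { error -> continuation.resumeWithException(error) },
        )
    }

suspend fun main() {
    val player = FakeRemotePlayer()
    // Reads like a blocking call, but suspends until a callback fires.
    println(player.playAwait("spotify:track:example"))
}
```

In an Android project with kotlinx.coroutines available, `suspendCancellableCoroutine` is usually preferred so that the pending call can be cleaned up on cancellation, and `callbackFlow` is the usual tool for turning a long-lived subscription into a Flow that unsubscribes when collection stops.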

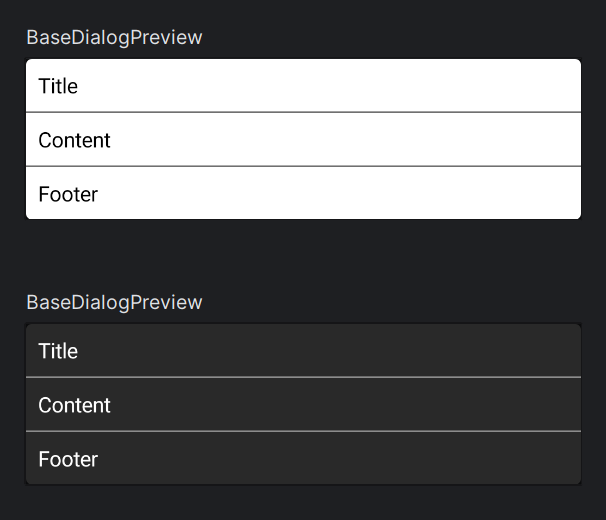

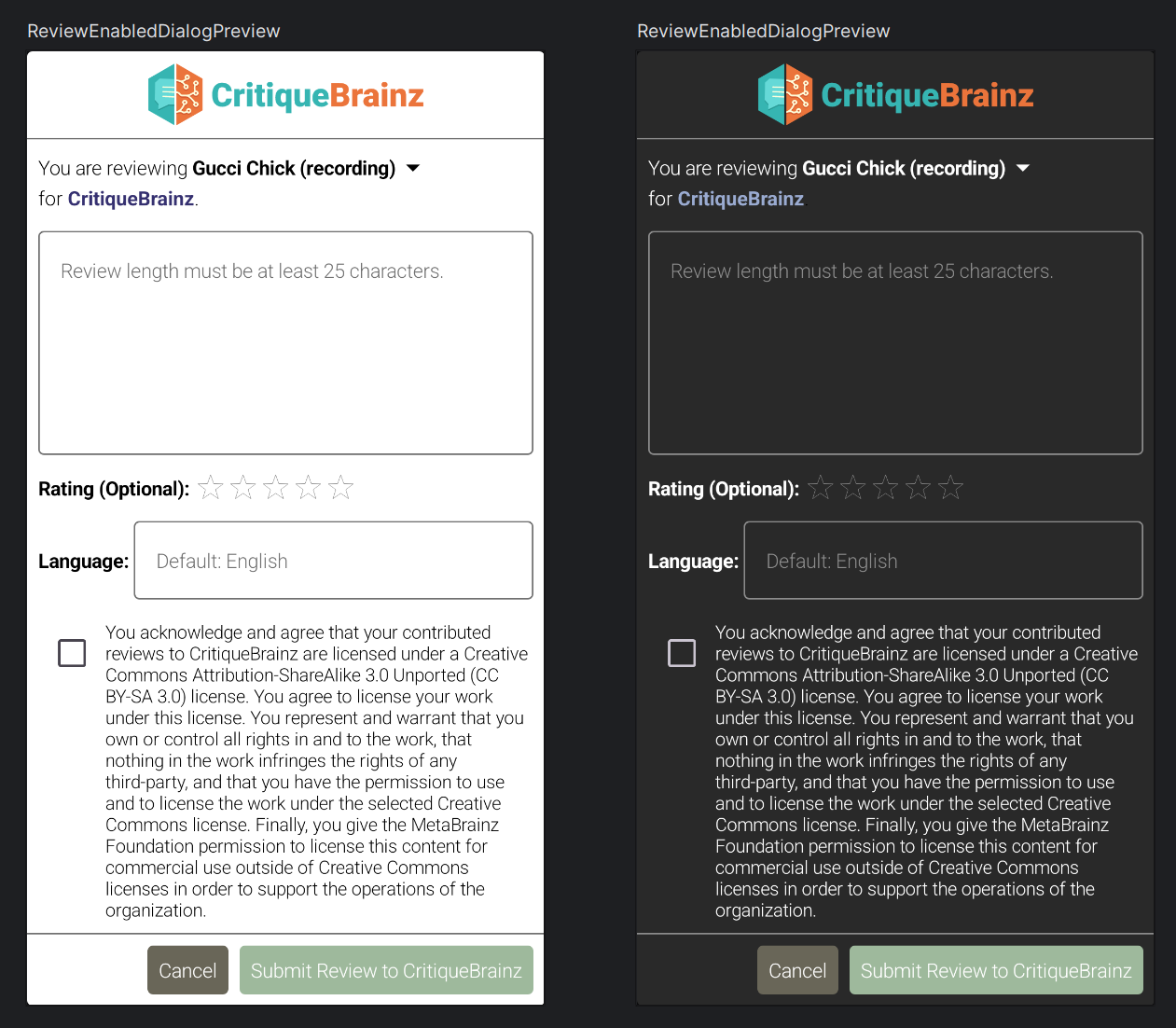

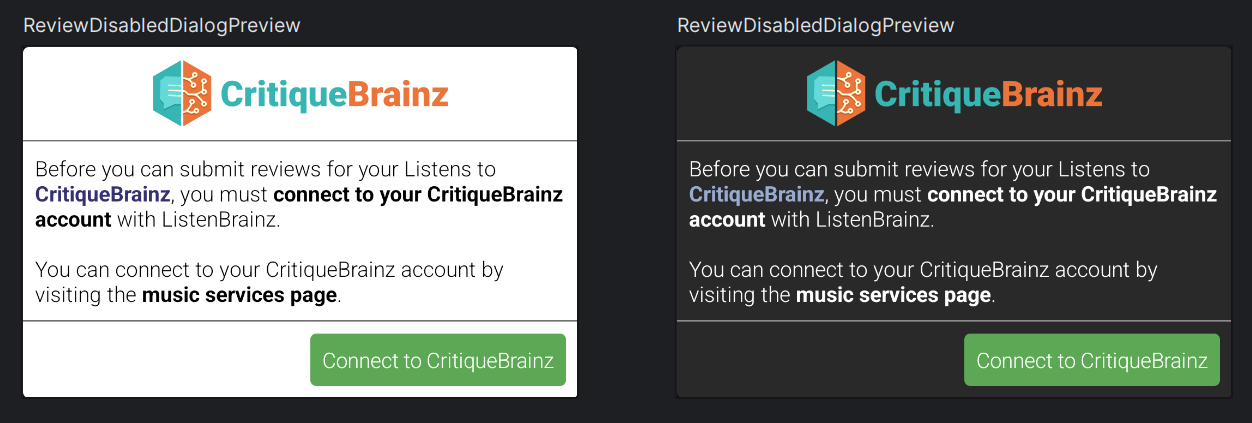

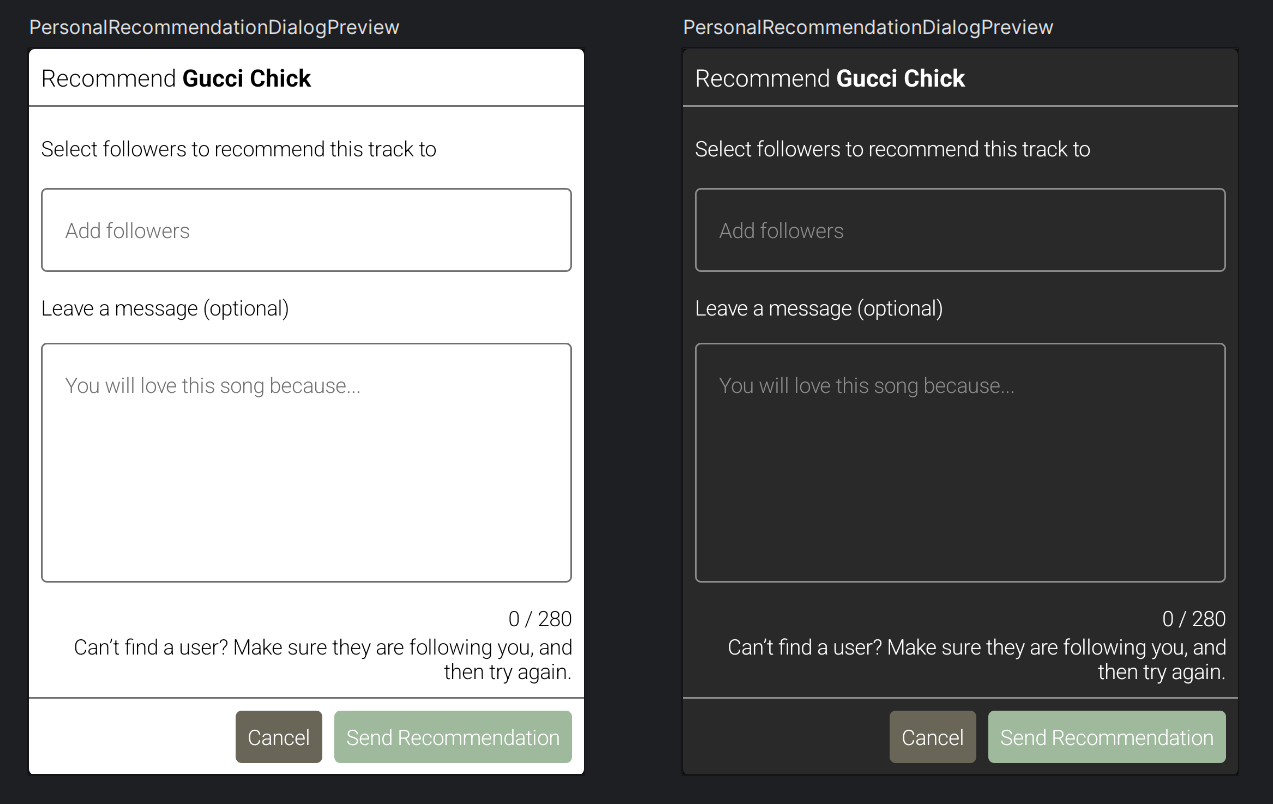

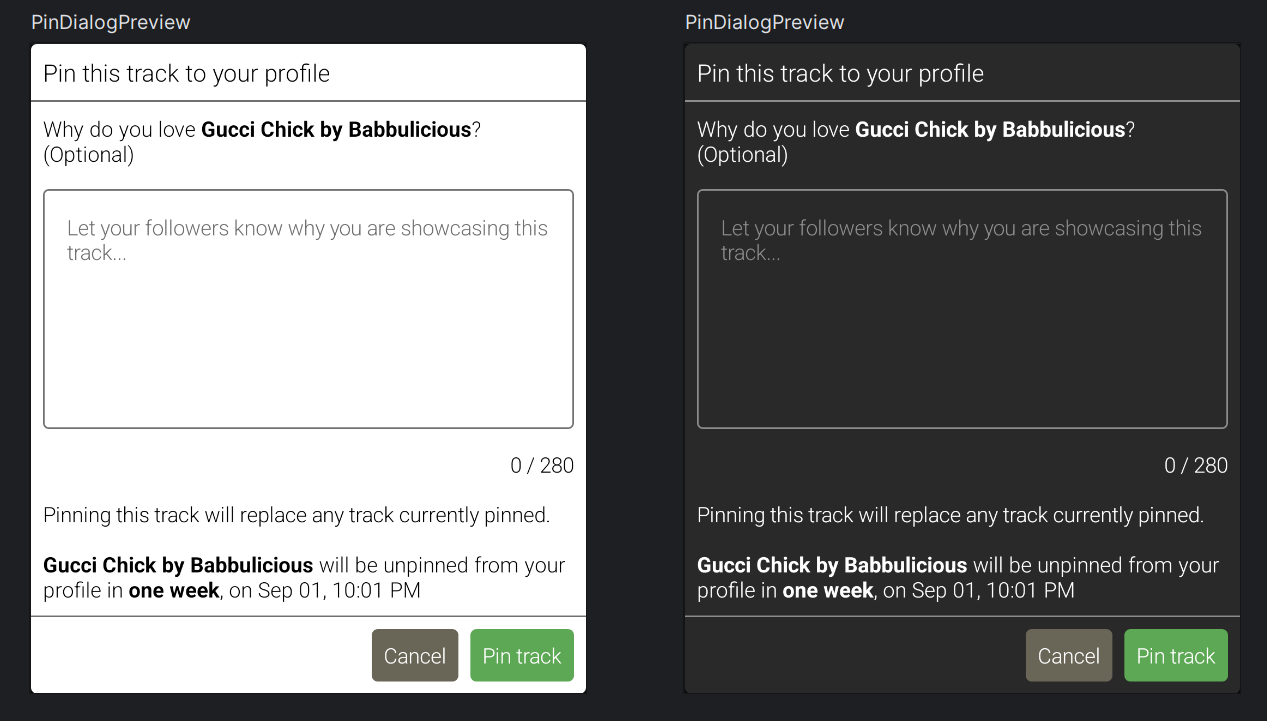

Dialogs

With this done, all that was left were the dropdowns and dialogs. There were four dialogs within the scope of this project, but the foundation for what’s to come had to be established early. So I started by breaking down all the dialogs on the website to find a common pattern. Out of this came the BaseDialog composable, which is the core foundation for the other dialogs.

```kotlin
@Composable
fun BaseDialog(
    onDismiss: () -> Unit,
    title: @Composable BoxScope.() -> Unit,
    content: @Composable ColumnScope.() -> Unit,
    footer: @Composable BoxScope.() -> Unit
)
```

And thus came the rest of the dialogs. The following are the renders from compose previews:

All these dialogs share the same color scheme, text styles, paddings, sizes and, of course, reusable composables such as buttons, text fields and search fields.

Performance optimisations for dialogs

While these dialogs came out pretty and aligned with the website, maintaining their state was challenging. After some thought, we came up with a solution where only one state was required to control the dialogs and only one dialog per dialog type was required for all three screens while maintaining previews. This meant a significant performance improvement over other alternatives and no loss of code quality.

This feature was covered in the span of 6 PRs:

- Phase 2.1 (Feed Service, repository and Base Feed UI setup)

- Phase 2.2 (Feed UI Setup with all three screens)

- Compose Stability and minor updates

- Phase 2.3 Part 1 (Remote Players Refactor Part 1)

- Phase 2.3 Part 2 (Remote Players Refactor Part 2)

- Phase 2.4 (All Dialogs)

On the server side of things, we completed the Similar Listens endpoint (GET /user/<user_name>/feed/events/listens/similar) fairly quickly because it closely resembles the Follow Listens endpoint. Similar listens are listens from users who are similar to you in terms of listening habits. There is a high possibility that you will discover new tracks while browsing this page.

Relevant links to my work on Similar Listens endpoint:

- Pull Request

- Ticket (For relevant details)

Surplus Jobs

Apart from only working on the GSoC project, I have been actively taking this opportunity to work as a team member and not just a contributor. These are some of the jobs I’ve worked on as well:

- Made listen submission core-code reusable and employed Work Manager to persist tasks with dependency injection. (Work)

- Implemented bulk submission which resulted in efficiency when offline listens were captured. (Work)

- Better theme switching. (Work)

- Fixing metadata of core data classes used for Listen Submission. (Work)

And many more.

What’s left?

A few tasks could not be completed during GSoC due to my mismanagement and the vast scope of the project. They will be completed after GSoC, and as of writing this blog, my mentor has given a green flag for their postponement. These tasks are:

- As mentioned earlier, the Similar Listens screen incurred significant changes in its UI design, and hence a new, intuitive UI will be designed as per the requirements.

- Unit tests for feed, and UI tests for both search and feed.

Experience

I am really glad that I got mentorship from akshaaatt, lucifer, and the rest of the MetaBrainz team. These are people whom I look up to, as they are people who are not only good at their crafts but are also very good mentors. I really enjoy contributing to ListenBrainz because almost every discussion is a roundtable discussion. Anyone can chime in, suggest, and have very interesting discussions on the IRC. I am very glad that my open-source journey started with MetaBrainz and its wholesome community. It is almost every programmer’s dream to work on projects that actually matter. I am so glad that I had the good luck to work on a project that is actually being used by a lot of people and also had the opportunity to work on a large codebase where a lot of people have already contributed. It really made me push my boundaries and made me more confident about being good at open source!