Hello!

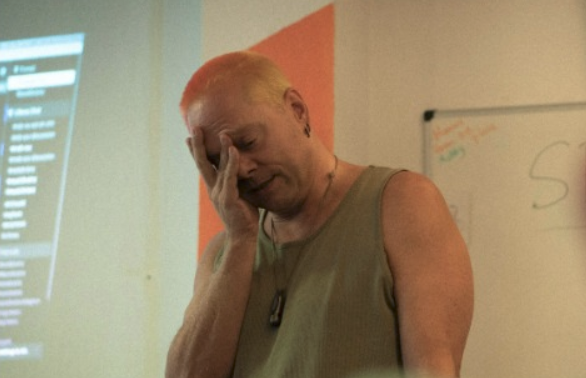

I’m sad to report that we had an incident on one of the ListenBrainz production database servers, where someone on our team might have accidentally run our test suite on our production server.

I’m sorry for this screw-up.

In plain English, we ended up deleting all the listens for our users and had to restore from backup. The bad news is that we lost some listens, for which we feel terrible (more details below).

The good news is that this is by design: our systems are set up to survive the loss of a whole day’s worth of data in case of a disaster like this one. While one part of the system failed, the backup systems worked as expected.

While this is not great, the alternative is to spend a lot more money on fully redundant systems for testing, staging and production. This setup would be a lot of work to install, maintain and pay for, even though it would end up sitting idle most of the time. We’re still a scrappy small non-profit that creates tremendous value for other projects while surviving on a budget that is less than most CEOs’ salaries. Things can still go wrong for us, and this is a fact of life.

Of course, we’re going to take steps to ensure that this doesn’t happen again, and we’re going to build in protections so that a simple mistake cannot take out our production database.

The restore is complete and our systems are back to normal now. We lost about 20 hours of listens, between these two timestamps:

November 2, 2023 12:00:00 AM UTC (epoch 1698883200) – November 2, 2023 7:44:52 PM UTC (epoch 1698954292)
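
If you want to check whether one of your own listens falls inside that window, here is a minimal Python sketch. It uses only the two epoch values quoted above; the helper names `to_utc` and `in_loss_window` are made up for illustration and are not part of any ListenBrainz tool or API.

```python
from datetime import datetime, timezone

# Epoch values (seconds) quoted in this post.
LOSS_START = 1698883200
LOSS_END = 1698954292


def to_utc(epoch: int) -> datetime:
    """Convert a Unix epoch in seconds to a timezone-aware UTC datetime."""
    return datetime.fromtimestamp(epoch, tz=timezone.utc)


def in_loss_window(timestamp: int) -> bool:
    """Hypothetical helper: True if the timestamp is inside the affected window (inclusive)."""
    return LOSS_START <= timestamp <= LOSS_END


start, end = to_utc(LOSS_START), to_utc(LOSS_END)
print(start.isoformat())  # 2023-11-02T00:00:00+00:00
print(end.isoformat())    # 2023-11-02T19:44:52+00:00
print(end - start)        # 19:44:52
```

The difference works out to 19 hours, 44 minutes and 52 seconds, which is where the "about 20 hours" figure comes from.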

Again, sorry for the hassle and the data loss. We’ll do better going forward.

P.S. We haven’t been able to determine who caused this to happen, but we assume it was a mistake.

It’s okay, guys. *pat on the back*