It really bugged me that it proved impossible to finish the huge BookBrainz importer project last year. Fortunately MetaBrainz (and Google) gave me the chance to continue working on my 2023 project during this Summer of Code, thank you! Our goal is still to import huge external datasets into the BookBrainz database schema.

Last year I worked on the backend services to transform and insert simple entities into the database. This year’s goal was to support importing multiple related entities and exposing the imported data on the website. We can now import entities (on the backend), which can be reviewed and approved by our users with ease.

If you want to know the full story, I recommend starting with my previous blog post, which explains the existing importer infrastructure and last year's problems in more detail. Or just read on if you are only interested in the advanced stuff I did this year.

What is the purpose of this project again?

BookBrainz still has a relatively small community and contains fewer entities than other comparable databases. Therefore we want to provide a way to import available collections of library records into the database while still ensuring that they meet BookBrainz' high data quality standards.

Thanks to a previous GSoC project in 2018, the database schema already contains additional tables for that purpose, where pending imports await a user's approval before becoming fully accepted entities in the database.

The project requires processing very large data dumps (e.g. MARC records or JSON files) in a robust way and transforming entities from one database schema to the BookBrainz schema. Additionally, the whole process should be repeatable without creating duplicate entries.

My previous GSoC project from 2023 achieved this for standalone entities, but importing works or editions is not very useful without being able to link them to their authors, for example. So there is still a good amount of work ahead to adapt this process to import a full set of related entities and to deal with all the edge cases.
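To make the "repeatable without duplicates" requirement more concrete, here is a minimal TypeScript sketch, not taken from the importer code: every parsed record is keyed by its data source and its external identifier, so running the same import twice adds each record only once. `ParsedRecord`, `ImportRegistry` and the in-memory `Map` are hypothetical stand-ins for the real database tables.

```typescript
// Hypothetical shape of a record extracted from an external data source.
interface ParsedRecord {
  source: string;     // e.g. "OpenLibrary"
  externalId: string; // the record's identifier within that source
  name: string;
}

// Stable key which identifies a record across repeated imports.
function importKey(record: ParsedRecord): string {
  return `${record.source}:${record.externalId}`;
}

// In-memory stand-in for the table of already imported records.
class ImportRegistry {
  private readonly imported = new Map<string, ParsedRecord>();

  // Returns true if the record was newly imported, false for a duplicate.
  importOnce(record: ParsedRecord): boolean {
    const key = importKey(record);
    if (this.imported.has(key)) {
      return false;
    }
    this.imported.set(key, record);
    return true;
  }

  get size(): number {
    return this.imported.size;
  }
}

const registry = new ImportRegistry();
const dump: ParsedRecord[] = [
  { source: "OpenLibrary", externalId: "OL1A", name: "Example Author" },
  { source: "OpenLibrary", externalId: "OL2A", name: "Another Author" },
];

// Running the same import twice still adds each record only once.
for (let run = 0; run < 2; run++) {
  for (const record of dump) {
    registry.importOnce(record);
  }
}
console.log(registry.size); // 2
```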

Pending and accepted entities

Entities (for example authors, editions and works) which have been extracted from an external data source (i.e. a database dump or an API response) go through various states during the import process. For this post, only the last two states are relevant:

Pending Entity: The transformed entity has been imported into the BookBrainz database schema, but has not been approved (or discarded) by a user so far. Additional data of the parsed entity which does not fit into the schema will be kept in a freeform JSON column.

Accepted Entity: The imported entity has been accepted by a user and now has the same status as a regularly added entity.
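How a parsed record might be divided between the regular columns and the freeform JSON column can be sketched in TypeScript as follows. The list of schema properties and all names are hypothetical; the real transformation is specific to each entity type.

```typescript
// Hypothetical subset of properties which map onto BookBrainz schema columns.
const SCHEMA_PROPERTIES = ["name", "sortName", "languages", "beginDate"] as const;
type SchemaProperty = (typeof SCHEMA_PROPERTIES)[number];

interface SplitEntity {
  data: Partial<Record<SchemaProperty, unknown>>; // goes into the regular tables
  freeform: Record<string, unknown>;             // goes into the freeform JSON column
}

function isSchemaProperty(key: string): key is SchemaProperty {
  return (SCHEMA_PROPERTIES as readonly string[]).includes(key);
}

// Keeps everything that fits the schema and collects the rest as freeform data.
function splitParsedEntity(parsed: Record<string, unknown>): SplitEntity {
  const result: SplitEntity = { data: {}, freeform: {} };
  for (const [key, value] of Object.entries(parsed)) {
    if (isSchemaProperty(key)) {
      result.data[key] = value;
    } else {
      result.freeform[key] = value;
    }
  }
  return result;
}

const split = splitParsedEntity({
  name: "Example Author",
  beginDate: "1900",
  personalName: "Example", // no matching column, kept as freeform data
});
console.log(JSON.stringify(split.freeform)); // {"personalName":"Example"}
```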

Pending entities currently only have aliases, identifiers and basic properties (such as annotation, languages, dates) which are specific per entity type. Allowing them to also have relationships could potentially lead to relationships between pending and accepted entities, because BookBrainz uses bidirectional relationships (new relationships also have to be added to the respective relationship’s target entity). In order to prevent opening that can of worms, relationships are currently stored in the additional freeform data of pending entities.

While this was an intentional decision to reduce the complexity of the project in 2018, it is not a viable long-term solution. Importing a work without at least a relationship to its author, for example, saves users little work, so we really need to support relationships now.
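A simplified TypeScript model illustrates why bidirectional relationships are the tricky part. It is an assumption for illustration, not the ORM code: because both entities list the relationship, adding one also modifies the target entity, so a pending source would reach into an accepted target (and vice versa).

```typescript
// Simplified model of a bidirectional relationship between two entities.
interface Relationship {
  type: string;
  sourceId: string;
  targetId: string;
}

interface EntityNode {
  id: string;
  pending: boolean;
  relationships: Relationship[];
}

// Both entities list the relationship, so adding one modifies the target too.
function addRelationship(source: EntityNode, target: EntityNode, type: string): Relationship {
  const relationship: Relationship = { type, sourceId: source.id, targetId: target.id };
  source.relationships.push(relationship);
  target.relationships.push(relationship);
  return relationship;
}

// True if an accepted entity would end up linked to a pending one.
function linksPendingToAccepted(source: EntityNode, target: EntityNode): boolean {
  return source.pending !== target.pending;
}

const author: EntityNode = { id: "author-1", pending: false, relationships: [] };
const work: EntityNode = { id: "work-1", pending: true, relationships: [] };
addRelationship(author, work, "wrote");
console.log(author.relationships.length, work.relationships.length); // 1 1
console.log(linksPendingToAccepted(author, work)); // true
```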

| | Accepted Entity (Standard) | Pending Entity (2018) | Pending Entity (now) |
|---|---|---|---|
| Basic properties | ✓ | ✓ | ✓ |
| Aliases | ✓ | ✓ | ✓ |
| Identifiers | ✓ | ✓ | ✓ |
| Relationships [1] | ✓ | ✗ | ✓ |
| BBID | ✓ | ✗ | ✓ |
| Revision number | ✓ | ✗ | ✗ |
| Freeform data | ✗ | ✓ | ✓ |

Table: Current and proposed features of pending entities

Currently the data of pending entities is stored in the regular bookbrainz.*_data tables (for example bookbrainz.edition_data) that are also used for accepted entities.

Only the additional freeform data and other import metadata (such as the source of the data) are stored in a separate table.

In order to support relationships between two pending entities, we have to assign them BBIDs if we also want to store relationships in the regular tables.

This schema change is necessary because the source and target entity columns of the bookbrainz.relationship table each contain a BBID.

[1] Refers to regular bidirectional relationships between two entities as well as implicit unidirectional relationships (which are used for Author Credits, Publisher lists and the link between an Edition and its Edition Group).

Project goals (aka what I didn’t finish last summer)

Last year I modernized the whole backend codebase from 2018, fixed bugs and rewrote parts of it from scratch. Of the original goals from 2023, the following remained:

- Support importing series entities (which were only introduced in 2021)

- Update database schema to support relationships between pending entities (and author credits!)

- Resolve an entity’s external identifiers (for example an OpenLibrary ID) to a BBID in order to create relationships between pending entities

- Advanced possibilities to repeat imports without creating duplicates (some of these are stretch goals)

While I have already updated most parts of the importer backend in 2023, the following additional changes are still necessary to get the whole importer project into a clean and working state again:

- The outdated website changes from bookbrainz-site#201, which handle pending imports, have to be rebased onto the current master branch, which also involves using the new environment with Webpack and Docker.

- Entity validators from bookbrainz-site (which are used to validate entity editor form data) have been duplicated and adapted for the importer backend. Ideally, generalized versions of these validators should be moved into bookbrainz-data-js and used by both.

I am going to start with the website changes, which are the most critical since they finally allow us to see and use the pending entities which we have been able to import since last year.

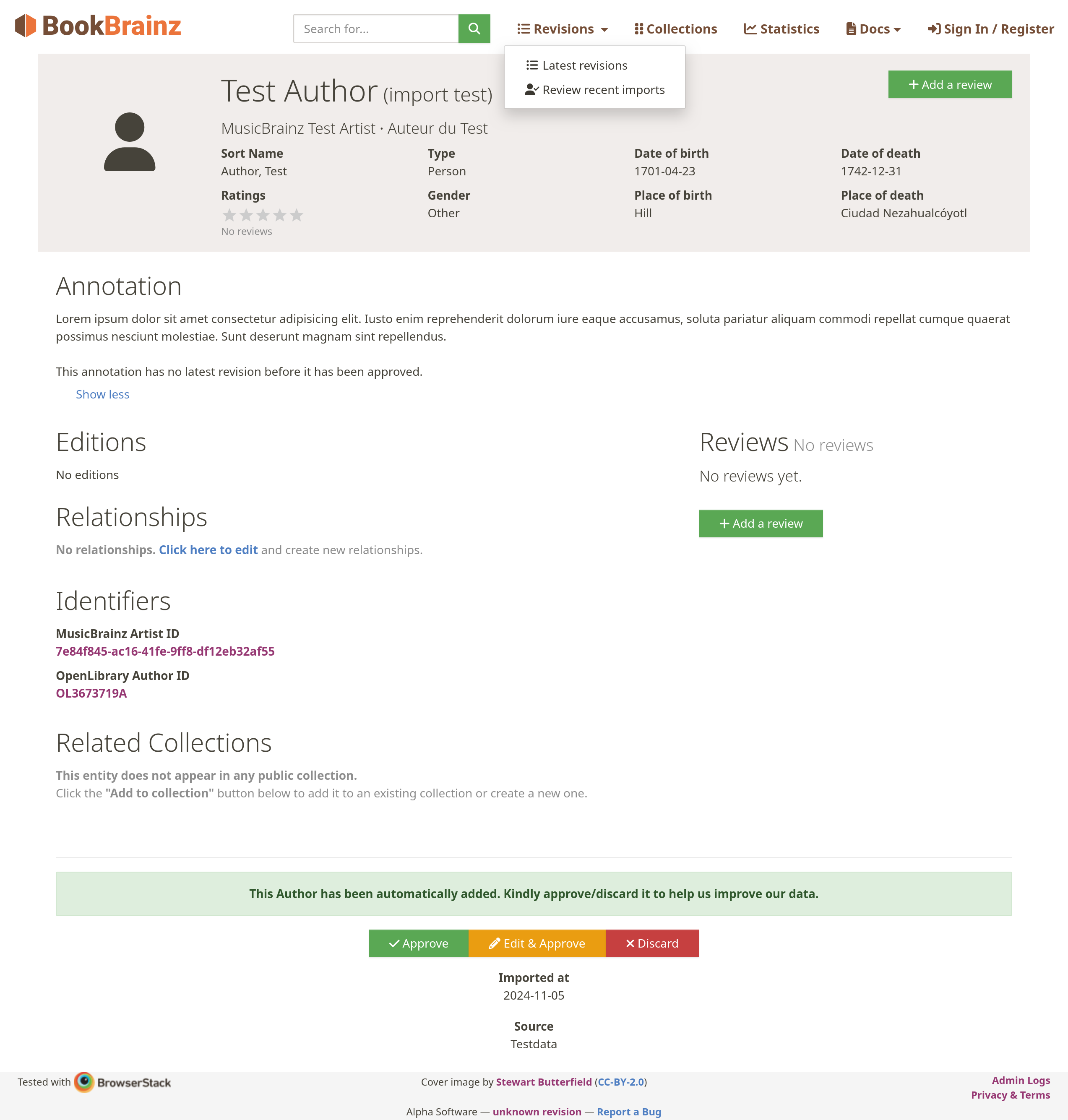

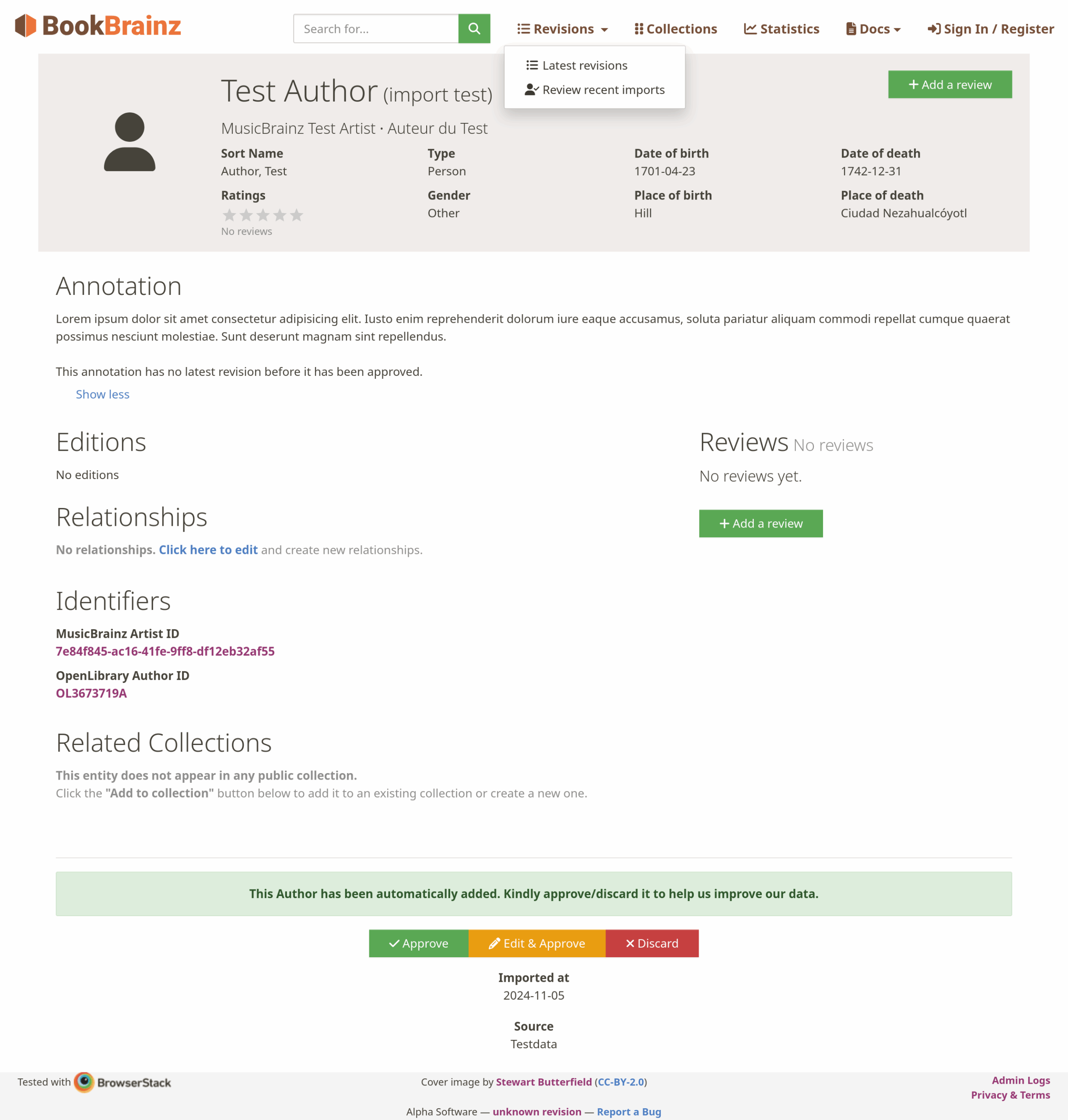

Pages for imported entities

As soon as a pending entity is available in the database, the user should be able to browse it on the website. The display page looks similar to that of an accepted entity, but provides different action buttons at the bottom. Here the user can choose whether they want to discard, approve or edit and approve the entity.

Once an entity gets approved, the server assigns it a BBID and creates an initial entity revision to which the approving user’s browser is redirected.

While a pending entity can be promoted to an accepted entity by a single vote, the pending import will only be deleted if it has been discarded by multiple users. This prevents accidentally losing pending entities forever, since they have no previous revision that could be restored! And when we decide to repeat the import process, we usually do not want to restore discarded entities.
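The asymmetric voting rule can be sketched in TypeScript like this. The threshold of two discard votes and all names are hypothetical, since the rule only speaks of "multiple users", and this sketch merely marks the import as discarded instead of deleting it.

```typescript
type ImportStatus = "pending" | "accepted" | "discarded";

// Hypothetical number of distinct editors required to discard an import.
const DISCARD_THRESHOLD = 2;

class PendingImport {
  status: ImportStatus = "pending";
  private readonly discardVotes = new Set<number>(); // editor IDs

  // A single approval promotes the import to an accepted entity.
  approve(): void {
    if (this.status === "pending") {
      this.status = "accepted";
    }
  }

  // Discarding needs votes from several distinct editors, because a
  // discarded import has no previous revision which could be restored.
  discard(editorId: number): void {
    if (this.status !== "pending") {
      return;
    }
    this.discardVotes.add(editorId);
    if (this.discardVotes.size >= DISCARD_THRESHOLD) {
      this.status = "discarded";
    }
  }
}

const pendingImport = new PendingImport();
pendingImport.discard(1);
pendingImport.discard(1); // the same editor voting twice only counts once
console.log(pendingImport.status); // pending
pendingImport.discard(2);
console.log(pendingImport.status); // discarded
```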

The good news is that these pages and actions had already been implemented in 2018; the bad news is that they were not guaranteed to be fully working and that the website has changed a lot since then.

Surprisingly there were not many conflicts when I merged the current master version into the feature branch.

It took a few more changes and migrations to newer versions of dependencies to get the website to build again.

Once the pending imports could be displayed, I also had to fix the approval action.

Pull requests: bookbrainz-site#1096 (rebase and fix import pages), bookbrainz-data#319 and bookbrainz-site#1103 (fix approval action)

Unified entity validators

There was still a lot of redundant code which made it harder to add new features to the project. One of the two major offenders was the loading of entities and the transformation of editing form data (which we will address after the schema change); the other was entity validation.

We had two nearly identical sets of entity validation functions in bookbrainz-site and bookbrainz-utils, which should instead be part of bookbrainz-data so that both can use them.

So I copied the validators and their tests from bookbrainz-site into bookbrainz-data and made sure the tests still pass.

Then I refactored the validators to throw a ValidationError with the reason instead of just returning a boolean, and adapted the tests to expect errors.

Outputting the reason was exclusive to the import validators, but it can also be useful to have more detailed errors for entity editor submission on the server.
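A minimal sketch of the throwing pattern, using a hypothetical `validateSortName` validator (the actual validator names and messages in bookbrainz-data may differ). A small boolean wrapper keeps the old yes/no behaviour available for callers that do not need the reason.

```typescript
// Error which carries the reason why a validation failed.
class ValidationError extends Error {
  readonly field: string | undefined;

  constructor(message: string, field?: string) {
    super(message);
    this.name = "ValidationError";
    this.field = field;
    // Keeps `instanceof` working when compiled to older JavaScript targets.
    Object.setPrototypeOf(this, ValidationError.prototype);
  }
}

// Hypothetical validator: throws with a reason instead of returning false.
function validateSortName(value: unknown): void {
  if (typeof value !== "string") {
    throw new ValidationError("Sort name must be a string", "sortName");
  }
  if (value.trim().length === 0) {
    throw new ValidationError("Sort name must not be empty", "sortName");
  }
}

// Boolean wrapper for callers which only need a yes/no answer.
function isValid(validator: (value: unknown) => void, value: unknown): boolean {
  try {
    validator(value);
    return true;
  } catch (error) {
    if (error instanceof ValidationError) {
      return false;
    }
    throw error; // unexpected errors are not swallowed
  }
}
```

On the server, the entity editor submission handler can then catch the error and report `error.message` to the user instead of a generic failure.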

Finally, I also copied the type definitions for the validated entity sections from bookbrainz-utils and integrated the one importer-specific difference of the bookbrainz-utils validators.

Pull requests: bookbrainz-data#316 (unified validators) and bookbrainz-utils#46 (usage for the importer backend)

BBIQ push command

So far I had been testing the website with pending entities which I added through the import queue with the OpenLibrary producer. In order to cover all properties an entity type can have and to make it easier to quickly test specific cases, I implemented a shortcut: The BBIQ (BookBrainz Import Queue management) CLI from last year got a new subcommand which allows you to directly push the contents of a JSON file into the queue.
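Conceptually, such a push command only needs to parse the file and enqueue each entity as a separate message. The following TypeScript sketch is hypothetical: the `Queue` interface stands in for the real message queue client, and accepting either a single object or an array is an assumption, not the documented file format.

```typescript
// Hypothetical minimal queue interface standing in for the real client.
interface Queue {
  push(message: string): void;
}

// Parses the file contents and pushes every entity as a separate message.
function pushJson(fileContents: string, queue: Queue): number {
  const parsed: unknown = JSON.parse(fileContents);
  // Assumption: a file holds either one entity object or an array of them.
  const entities: unknown[] = Array.isArray(parsed) ? parsed : [parsed];
  // Validate everything first, so that a bad file is not queued halfway.
  for (const entity of entities) {
    if (typeof entity !== "object" || entity === null || Array.isArray(entity)) {
      throw new TypeError("Each queued item must be a JSON object");
    }
  }
  for (const entity of entities) {
    queue.push(JSON.stringify(entity));
  }
  return entities.length;
}

// In-memory queue for trying it out without a running message broker.
class ArrayQueue implements Queue {
  readonly messages: string[] = [];

  push(message: string): void {
    this.messages.push(message);
  }
}
```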

Especially in light of the upcoming entity import schema change, I wanted to make the current importer setup more battle-tested before I break everything again. So I went ahead and created a few accompanying JSON files with simple test data, which are guaranteed to contain values for all properties an entity can currently have.
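Such fixtures only stay useful if they really do cover every property, which is easy to check automatically. In this hypothetical TypeScript sketch, the property list is an assumption and not the complete set of author properties:

```typescript
// Hypothetical subset of the properties an imported author can have.
const AUTHOR_PROPERTIES = [
  "aliases", "identifiers", "annotation", "beginDate", "endDate", "gender",
] as const;

// Absent values, null, empty strings and empty arrays count as missing.
function isEmptyValue(value: unknown): boolean {
  return value === undefined || value === null || value === ""
    || (Array.isArray(value) && value.length === 0);
}

// Lists the expected properties for which a test fixture has no value.
function missingProperties(
  fixture: Record<string, unknown>,
  expected: readonly string[],
): string[] {
  return expected.filter((property) => isEmptyValue(fixture[property]));
}
```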

Pull request: bookbrainz-utils#50

It quickly proved to be a useful tool to find a few remaining bugs and properties which were not supported yet. The most important one of them was…

Annotations

Originally, annotations were not implemented for imported entities, because annotations have a last_revision_id INT NOT NULL column.

Since an import does not have a revision, it is not possible to create a valid annotation row for it.

Now we have made the column nullable and can import annotation data by inserting it into the database with last_revision_id = NULL.

Of course the website also had to be adapted to display the annotation, preserve it on approval and load it into the entity editor for the edit and approve process. Once the pending entity has been approved, it has a revision whose ID can be set for the annotation.
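The lifecycle of an imported annotation can be modelled in a few lines of TypeScript (a simplified sketch with hypothetical names, not the ORM code): the revision ID starts out as null and is filled in once approval has created the first revision.

```typescript
interface Annotation {
  id: number;
  content: string;
  lastRevisionId: number | null; // null while the entity is a pending import
}

// Annotation of a freshly imported entity: there is no revision yet.
function createImportedAnnotation(id: number, content: string): Annotation {
  return { id, content, lastRevisionId: null };
}

// On approval, link the initial revision while preserving the content.
function linkAnnotationToRevision(annotation: Annotation, revisionId: number): Annotation {
  if (annotation.lastRevisionId !== null) {
    throw new Error("Annotation already belongs to a revision");
  }
  return { ...annotation, lastRevisionId: revisionId };
}
```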

Pull requests: bookbrainz-data#320, bookbrainz-site#1107 and bookbrainz-data#321

Series entity imports

With the deeper understanding I had gained while fixing the website for all other entity types, I finally felt confident enough to implement the missing support for pending series entities.

I already had to add series import tables to the database earlier in order to prevent the "recent imports" page from crashing the server. The code forced me to do that, since it iterated over all entity types looking for their import tables, including series, which did not exist in 2018.

Adding the missing server routes, pages and data transformation functions for imported series was pretty straightforward. Since imported entities can’t have relationships yet, I had to handle the special case that a series has no defined items (which does not occur for regular series).
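A hypothetical TypeScript sketch of that special case: when series items are derived from relationships, a missing relationship list yields an empty item list instead of an error. Sorting items without a position to the end is an illustrative choice, not necessarily what the website does.

```typescript
interface SeriesItemRelationship {
  targetBbid: string;
  position: string | null; // ordering attribute, may be unset
}

interface SeriesData {
  name: string;
  relationships?: SeriesItemRelationship[]; // absent for imported series (so far)
}

// Derives the ordered series items; a series without relationships yields [].
function getSeriesItems(series: SeriesData): SeriesItemRelationship[] {
  const items = series.relationships ?? [];
  // Copy before sorting so the input is not mutated; unset positions go last.
  return [...items].sort((a, b) => {
    if (a.position === null) {
      return b.position === null ? 0 : 1;
    }
    if (b.position === null) {
      return -1;
    }
    return a.position.localeCompare(b.position, undefined, { numeric: true });
  });
}
```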

Pull requests: bookbrainz-site#1109 and bookbrainz-data#321

Pending entities with BBIDs

Now we finally get to the core of this year’s project: Pending entities are still identified by a numeric ID instead of a BBID like regular entities. Besides making it impossible for pending entities to be used in relationships, this requires separate logic to load data (for display or editing) and to store it (after approval). Having this separate logic which is almost identical but shares almost no code with regular entities is very annoying as every change for an entity type has to be done in two places.

Assigning imports a BBID required a schema change.

Previously, the SQL schema of the entry table for pending entities (which are linked to their data via bookbrainz.*_import_header tables for each entity type) looked as follows:

```sql
CREATE TABLE IF NOT EXISTS bookbrainz.import (
	id SERIAL PRIMARY KEY,
	type bookbrainz.entity_type NOT NULL
);
```

When we alter the id column (and all foreign key columns which refer to it) to be a UUID column, the bookbrainz.import table becomes basically identical to the bookbrainz.entity table.

So I suggested combining both tables and using an additional column to distinguish imports from accepted entities:

```sql
CREATE TABLE bookbrainz.entity (
	bbid UUID PRIMARY KEY DEFAULT public.uuid_generate_v4(),
	type bookbrainz.entity_type NOT NULL,
	is_import BOOLEAN NOT NULL DEFAULT FALSE -- new flag
);
```

Combining the tables (and dropping the bookbrainz.import table) has two advantages:

- We no longer have to move pending entities into the bookbrainz.entity table once they have been accepted; we can simply update the new is_import flag.

- The source_bbid and target_bbid columns of the bookbrainz.relationship table have a foreign key constraint to the bbid column of bookbrainz.entity. Having a separate table for imports would have violated that constraint. (Alternatively, we would have needed a new flag for both relationship columns in order to know whether the BBID belongs to an accepted entity or to a pending import.)
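The effect of the combined table can be mimicked with a small in-memory TypeScript model (hypothetical; PostgreSQL enforces the real constraint): a relationship is allowed as long as both BBIDs exist in the single entity table, whether or not they are imports, and accepting an import is a flag update rather than a move between tables.

```typescript
interface EntityRow {
  bbid: string;
  type: string;
  isImport: boolean;
}

// In-memory stand-in for the combined bookbrainz.entity table.
class EntityTable {
  private readonly rows = new Map<string, EntityRow>();

  insert(row: EntityRow): void {
    this.rows.set(row.bbid, row);
  }

  // Mimics the foreign key check on relationship.source_bbid / target_bbid.
  assertRelationshipAllowed(sourceBbid: string, targetBbid: string): void {
    for (const bbid of [sourceBbid, targetBbid]) {
      if (!this.rows.has(bbid)) {
        throw new Error(`Foreign key violation: unknown BBID ${bbid}`);
      }
    }
  }

  // Accepting an import only flips the flag; the row stays where it is.
  accept(bbid: string): void {
    const row = this.rows.get(bbid);
    if (row === undefined) {
      throw new Error(`Unknown BBID ${bbid}`);
    }
    row.isImport = false;
  }

  get(bbid: string): EntityRow | undefined {
    return this.rows.get(bbid);
  }
}
```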

Additionally, I combined the entity views, which are used to load and update entities, with their import view counterparts.

The only difference with respect to these views is that imports have an empty (NULL) revision number.

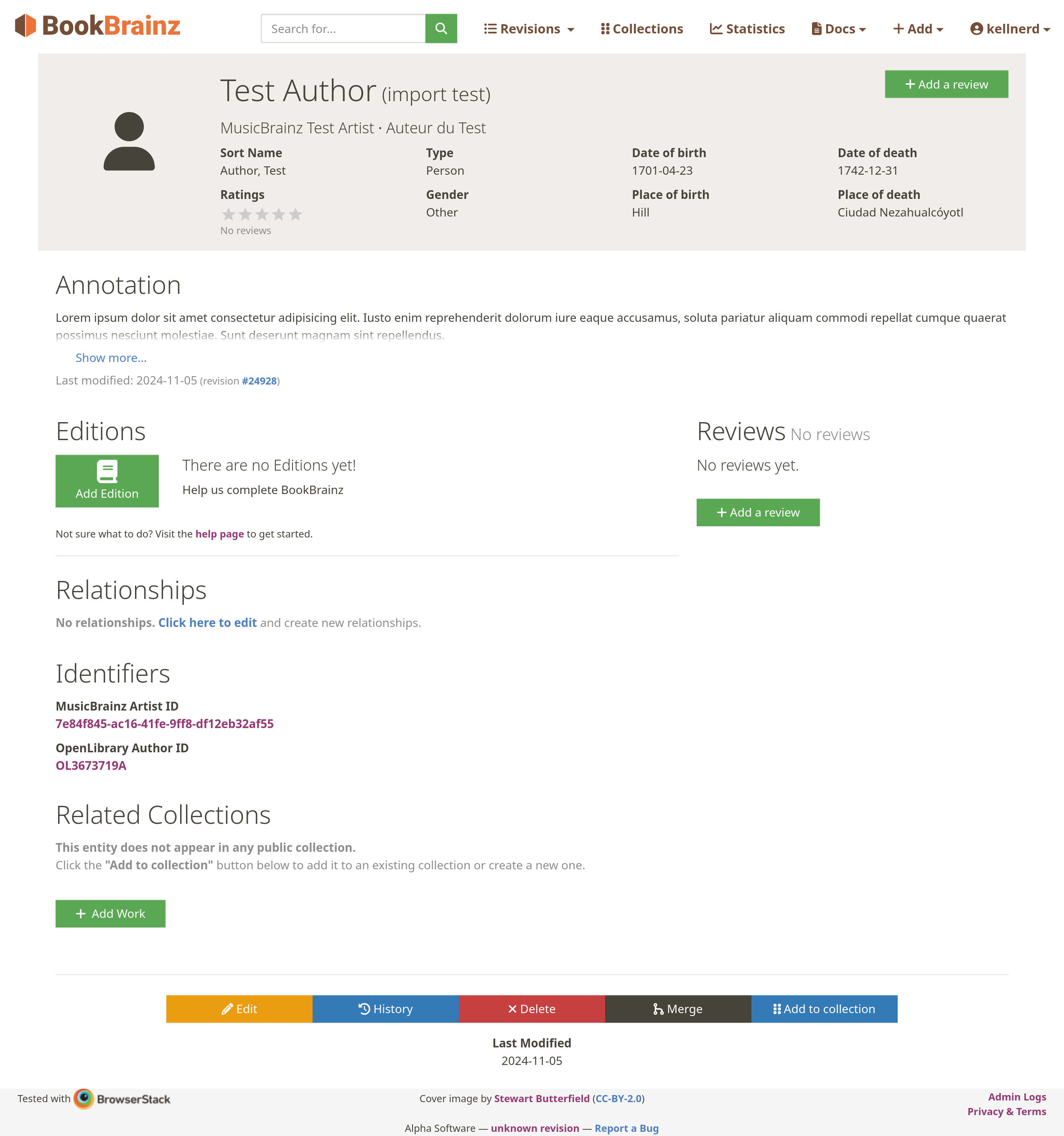

With the schema change in place, we can treat pending entities the same way as regular entities in almost all places. This allowed me to get rid of the separate display pages and controllers; we can now load and display pending entities using the regular entity pages and controllers. Only minimal changes were necessary to also load import metadata and to display different actions (depending on whether the entity has a revision).
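The one switch the display pages still need might look like this in TypeScript; the action names are hypothetical. An entity without a revision number is a pending import and gets the import actions in the footer:

```typescript
interface DisplayedEntity {
  bbid: string;
  revisionId: number | null; // NULL in the combined views for pending imports
}

// Hypothetical names for the buttons in the entity page footer.
type FooterAction =
  | "edit" | "history" | "delete" | "merge"
  | "approve" | "editAndApprove" | "discard";

function isPendingImport(entity: DisplayedEntity): boolean {
  return entity.revisionId === null;
}

function footerActions(entity: DisplayedEntity): FooterAction[] {
  return isPendingImport(entity)
    ? ["approve", "editAndApprove", "discard"]
    : ["edit", "history", "delete", "merge"];
}
```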

Since pending entities already get a BBID assigned, I could also rewrite the approval action to preserve this BBID. Rather than collecting all properties of the pending entity data and creating a new entity (with new BBID), we simply create an entity header and a revision which link to the existing data.
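A simplified TypeScript sketch of the new approval step, using hypothetical in-memory stand-ins for the header and revision tables: no data is copied, the initial revision points to the existing data row, and the BBID stays the same.

```typescript
interface Revision {
  id: number;
  editorId: number;
  bbid: string;
  dataId: number; // points to the existing *_data row of the import
}

interface Header {
  bbid: string;
  masterRevisionId: number;
}

// In-memory stand-ins for the entity header and revision tables.
interface Store {
  headers: Map<string, Header>;
  revisions: Revision[];
}

// Approves a pending import without copying its data or changing its BBID.
function approveImport(store: Store, bbid: string, dataId: number, editorId: number): Revision {
  if (store.headers.has(bbid)) {
    throw new Error(`Entity ${bbid} has already been approved`);
  }
  const revision: Revision = {
    id: store.revisions.length + 1,
    editorId,
    bbid,
    dataId,
  };
  store.revisions.push(revision);
  store.headers.set(bbid, { bbid, masterRevisionId: revision.id });
  return revision;
}
```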

Now we can also load imports into the entity editor without any changes, because we are using the combined entity views! In order to make editing of pending entities (Edit & Approve) work, only minimal changes to the edit handler are necessary:

- Approve the import for an initial revision before creating a new Edit revision as usual.

- Exit early if the entity is approved without additional changes and avoid creating an empty revision.
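The two changes to the edit handler can be summarised in a short TypeScript sketch; `approve` and `createRevision` are hypothetical callbacks standing in for the real database operations:

```typescript
interface EditContext {
  isPendingImport: boolean;
  hasChanges: boolean;
}

interface EditOperations {
  approve: () => number;        // returns the ID of the initial revision
  createRevision: () => number; // returns the ID of the new edit revision
}

// Returns the IDs of all revisions created by this submission.
function handleEntityEdit(context: EditContext, ops: EditOperations): number[] {
  const created: number[] = [];
  if (context.isPendingImport) {
    // Approve the import first so that it gets an initial revision.
    created.push(ops.approve());
  }
  if (!context.hasChanges) {
    // Approved without further changes: exit early, no empty revision.
    return created;
  }
  created.push(ops.createRevision());
  return created;
}
```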

Finally, I had the enormous pleasure of deleting a ton of unused code.

Pull requests: bookbrainz-site#1136 (schema and website), bookbrainz-data#323 (ORM adaption) and bookbrainz-utils#51 (adaption)

Result and Outlook

Pending entities of all types can now be viewed, approved and discarded via the website. They share the display pages of regular entities (which only show different actions in the footer for them). Even better, they also share the same data loading and updating logic. This means that they can be edited just like regular entities: they only get approved behind the scenes on submission (to create an initial revision), and all changes by the user are stored as a separate revision.

All of this became possible because pending entities have BBIDs now and share DB tables and views with regular entities. For most parts of the website there is no longer a difference between pending and accepted entities except the latter having a revision number.

Finally, it is possible for pending entities to have relationships to other entities, regardless of whether these are pending or not. The website can already handle these; only the importer backend needs to be updated to actually import entities with relationships.

So we are not fully done yet: the BBIQ consumer has to support inserting relationships into the database, and our producer implementations (for example the OpenLibrary producer) have to output them. Supplying the necessary utility functions to resolve an entity's external identifiers (for example an OpenLibrary ID) to a BBID is the next step towards that goal.
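One possible shape for such a utility, sketched in TypeScript under the assumption that already imported entities can be looked up by their external identifier (the lookup map, the key format and all names are hypothetical): relationships output by a producer reference external IDs, and only those which resolve to a BBID are ready to be inserted.

```typescript
interface ExternalRef {
  source: string;     // e.g. "OpenLibrary"
  externalId: string; // e.g. an OpenLibrary author ID
}

interface ProducedRelationship {
  type: string; // hypothetical relationship type name
  target: ExternalRef;
}

interface ResolvedRelationship {
  type: string;
  sourceBbid: string;
  targetBbid: string;
}

// Hypothetical lookup from "source:externalId" to the BBID of an entity
// (pending or accepted) which has already been imported.
type BbidLookup = Map<string, string>;

function resolveRelationships(
  sourceBbid: string,
  relationships: ProducedRelationship[],
  lookup: BbidLookup,
): { resolved: ResolvedRelationship[]; unresolved: ProducedRelationship[] } {
  const resolved: ResolvedRelationship[] = [];
  const unresolved: ProducedRelationship[] = [];
  for (const relationship of relationships) {
    const key = `${relationship.target.source}:${relationship.target.externalId}`;
    const targetBbid = lookup.get(key);
    if (targetBbid === undefined) {
      // Target not imported yet; such relationships could be retried later.
      unresolved.push(relationship);
    } else {
      resolved.push({ type: relationship.type, sourceBbid, targetBbid });
    }
  }
  return { resolved, unresolved };
}
```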

And once that has been achieved, we can focus on making repeated import processes more intelligent, so that they reuse more existing data and even suggest updates for already accepted entities. By combining the importer project with the entity merge algorithm, we could even try to suggest updates for accepted entities which have been edited in the meantime.

Thinking outside the box, we could consider not only how to enrich BookBrainz data with these automatic imports, but also how to contribute data which has been approved and edited or merged back to the external source, since we have the necessary external identifiers and the revision history.

Final words

It was a great experience to participate in Summer of Code again. This year I intentionally decided to apply only for a 175-hour project, because the 350-hour project last year was a bit much. Although that meant I could not realistically include all the potential goals I had thought about in my proposal, it was a good decision and gave me more flexibility.

Thank you very much, monkey, for mentoring me again over the last six months and for being there to discuss things when I needed a second opinion. I am looking forward to making the importer infrastructure production-ready together after GSoC!

Thank you to all others who expressed interest in the project and asked questions. Once we release the importer project for beta testing, there will also be an announcement post with more details for website users.

All my pull requests for MetaBrainz repositories during GSoC 2024
